
Backstage of the Autonomous Robot

Autonomous Robot Technology

Our Sensor Suite

Our Autonomous Driving Software

Earth Robotics has software, hardware, and robotics engineers working together. This integrated approach allows us to develop state-of-the-art strategies for our robot to safely navigate and interact with people and other obstacles.

See

Our robot, Lu, perceives its surroundings through a multi-sensor suite. These sensors work together to give the robot a detailed 360-degree view of the world around it.

Go

Lu has extremely precise localization and planning tools that direct it to the places our customers want it to go. We have also developed tools to build and regularly update high-definition 3D maps of the areas where our robot drives.

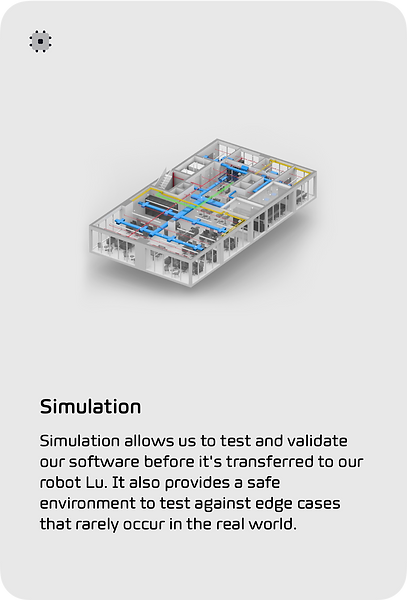

Learn

Lu is on the path to fully autonomous driving, learning from detailed simulation and controlled testing.

Stuck

In the rare instance that a robot stops in an unfamiliar situation, it contacts a remote operations center, where an operator helps unblock the robot.

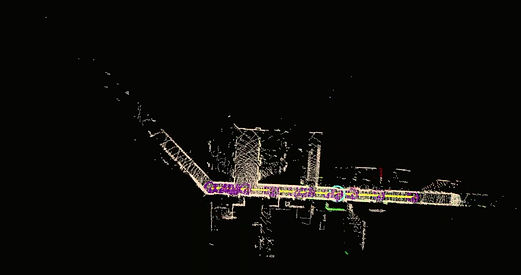

3D SLAM

Earth Robotics is building a fully integrated autonomous mobility system. Our system allows us to deliver an experience with safety incorporated by design into every aspect of our service.

Sensor Suite

RADARS

CAMERAS

LIDAR

Lu uses a unique sensor architecture that combines cameras, lidar, and radar to see its surroundings. This allows our robot to handle many scenarios.

360-Degree Field of View

Our sensor placement provides overlapping fields of view and 360° coverage. This provides redundancy and lets the robot perceive equally well in all directions.
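As a rough illustration of what overlapping coverage means, the sketch below checks whether a set of sensors covers every bearing around the robot and how much of the circle is seen by two or more sensors. The sensor layout (four sensors, each with a 120° horizontal field of view) and all names are hypothetical and are not Lu's actual configuration.

```python
from typing import List, Sequence, Tuple

# Hypothetical layout: (mounting heading in degrees, horizontal FOV in degrees).
SENSORS: List[Tuple[float, float]] = [(0, 120), (90, 120), (180, 120), (270, 120)]


def sensors_seeing(bearing_deg: float,
                   sensors: Sequence[Tuple[float, float]] = SENSORS) -> int:
    """Count the sensors whose field of view contains the given bearing."""
    count = 0
    for heading, fov in sensors:
        # Smallest signed angular difference, wrapped into [-180, 180).
        diff = (bearing_deg - heading + 180) % 360 - 180
        if abs(diff) <= fov / 2:
            count += 1
    return count


def coverage_report(sensors: Sequence[Tuple[float, float]] = SENSORS,
                    step_deg: int = 1) -> Tuple[bool, float]:
    """Sweep all bearings; return (fully_covered, fraction seen by 2+ sensors)."""
    counts = [sensors_seeing(b, sensors) for b in range(0, 360, step_deg)]
    fully_covered = all(c >= 1 for c in counts)
    redundant_fraction = sum(c >= 2 for c in counts) / len(counts)
    return fully_covered, redundant_fraction
```

With this assumed layout every bearing is seen by at least one sensor, and the diagonal directions are seen by two, which is where the redundancy comes from.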

SEES OVER 131 FT (ABOUT 40 M)

Autonomous Robot Software

Lu's physical sensor suite works seamlessly with our sophisticated autonomous software. Using state-of-the-art software, our robot senses its environment and predicts how the objects around it will move. With this information, the robot plans and steers its trajectory. The software also includes a localization function that lets Lu know precisely where it is at all times.

Perception

Our robot, Lu, sees its surroundings through computer vision technologies. It takes the images and data from its sensors to detect, track, and avoid objects. Our state-of-the-art technology uses deep-learning methods to segment and classify objects in our sensor data.

Prediction

Lu predicts the future actions of the objects around it using a software framework that integrates the following:

Domain-specific rules: Our software takes the context of the situation into account (e.g., a person's direction).

Physics-based modeling: The software anticipates where a dynamic object will be, given its estimated speed and acceleration (e.g., a person's speed).

Data-driven machine-learned behavior modeling: Our robot interprets human behavior and uses this information to anticipate the actions of dynamic objects (e.g., a person veering in a certain direction).
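The physics-based part of the list above can be sketched with basic kinematics. The example below is a minimal illustration that assumes a constant-acceleration motion model in a flat 2D plane. The `TrackedObject` class and `predict_position` function are hypothetical names, not part of our software.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TrackedObject:
    """State of a dynamic object (hypothetical structure, SI units)."""
    x: float          # position in metres
    y: float
    vx: float = 0.0   # velocity in m/s
    vy: float = 0.0
    ax: float = 0.0   # acceleration in m/s^2
    ay: float = 0.0


def predict_position(obj: TrackedObject, dt: float) -> Tuple[float, float]:
    """Predict where the object will be after dt seconds.

    Assumes constant acceleration: p(t) = p0 + v0*t + 0.5*a*t^2.
    """
    x = obj.x + obj.vx * dt + 0.5 * obj.ax * dt ** 2
    y = obj.y + obj.vy * dt + 0.5 * obj.ay * dt ** 2
    return x, y


# A person walking along the x axis at 1.5 m/s, predicted 2 seconds ahead.
person = TrackedObject(x=0.0, y=0.0, vx=1.5)
future_x, future_y = predict_position(person, dt=2.0)
```

A model like this is only reliable over short horizons, which is one reason to combine it with rules and learned behavior models as described above.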

Mapping

High-fidelity maps are crucial for enabling autonomous robots to know exactly where they are. We are developing our own mapping technology as well as the maps themselves, which lets us ensure a high level of resolution and quality.

Planning & Control

Our planning methodology uses our software's perception and its predictions of what other road objects will do to plan a path for our robot. This enables the robot to drive where it needs to go. Our software constantly evaluates the robot's surroundings and the predicted paths of other road objects to plan its driving actions.
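One simple way to picture this step is to score a few candidate paths against the predicted positions of nearby objects and keep the shortest one that stays clear of them. The sketch below is only an illustration under that assumption. The function names, the fixed time steps, and the 0.5 m safety margin are all hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def min_clearance(path: Sequence[Point],
                  predicted: Sequence[Sequence[Point]]) -> float:
    """Smallest robot-to-object distance, comparing matching time steps."""
    best = math.inf
    for t, (rx, ry) in enumerate(path):
        for track in predicted:
            if t < len(track):
                ox, oy = track[t]
                best = min(best, math.hypot(rx - ox, ry - oy))
    return best


def path_length(path: Sequence[Point]) -> float:
    """Total length of a path given as a sequence of waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def choose_path(candidates: List[List[Point]],
                predicted: Sequence[Sequence[Point]],
                safety_margin: float = 0.5) -> Optional[List[Point]]:
    """Return the shortest candidate that keeps the safety margin, or None."""
    safe = [p for p in candidates
            if min_clearance(p, predicted) >= safety_margin]
    return min(safe, key=path_length) if safe else None
```

Returning `None` when no candidate is safe leaves the decision to the caller, for example stopping and asking for help.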

Our Indoor Mirror Map (IMM)

IMM is a digital replica of the real world created by our robots. Digital building data in particular is an important element for smart cities, autonomous driving, service robots, XR, AR, and metaverses. In addition, active public-private investment is taking place to make cities more intelligent.

Advanced Computer Vision

Color

Depth

Segmentation

Detected object

Point 3D map view

Local View

Indoor Localization

Our computer vision technique allows us to identify and locate objects in an image or video. We frame object detection as a regression problem to spatially separated bounding boxes and associated class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation. Since the whole detection pipeline is a single network, it can be optimized end-to-end directly on detection performance.
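To make the regression framing concrete, the sketch below decodes the output for one grid cell of such a network into a pixel-space bounding box and a class score. It assumes a common single-shot layout in which each cell predicts `[x, y, w, h, confidence, class probabilities...]`, with `x` and `y` relative to the cell and `w` and `h` relative to the image. That layout, the class list, and the function name are illustrative assumptions, not a description of our network.

```python
from typing import Sequence, Tuple

CLASSES = ["person", "door", "elevator"]  # hypothetical class list

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def decode_cell(pred: Sequence[float], row: int, col: int, grid_size: int,
                img_w: int, img_h: int) -> Tuple[Box, str, float]:
    """Turn one cell's regression output into a box, a label, and a score."""
    x, y, w, h, conf = pred[:5]
    class_probs = pred[5:]

    # Box centre: cell offset plus in-cell position, scaled to pixels.
    cx = (col + x) / grid_size * img_w
    cy = (row + y) / grid_size * img_h
    # Box size is predicted as a fraction of the whole image.
    bw, bh = w * img_w, h * img_h

    # Class score = box confidence times the most likely class probability.
    best = max(range(len(class_probs)), key=lambda i: class_probs[i])
    score = conf * class_probs[best]

    box = (cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)
    return box, CLASSES[best], score


# Centre cell of a 7x7 grid on a 700x700 image.
box, label, score = decode_cell(
    [0.5, 0.5, 0.2, 0.4, 0.9, 0.8, 0.1, 0.1],
    row=3, col=3, grid_size=7, img_w=700, img_h=700)
```

In a full pipeline this decoding would run for every cell, followed by a step such as non-maximum suppression to drop duplicate boxes.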

Elevator Control

Elevator control extends the robot's access capabilities to standard elevators.

Powerful Compute System

Our robot, Lu, comes with advanced hardware capable of providing autonomous features and full self-driving capabilities through software updates designed to improve functionality over time.

AI

Lu's navigation system uses advanced AI to improve its performance.
